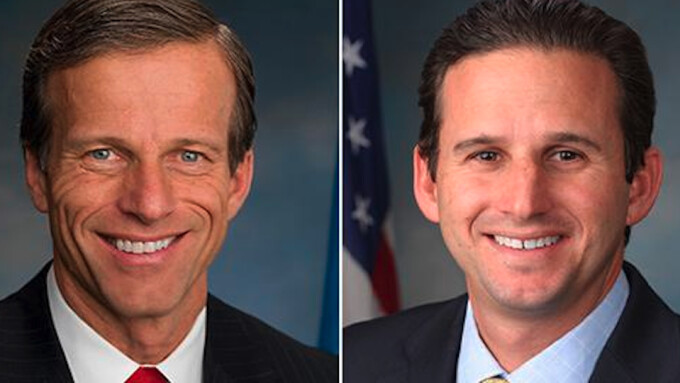

WASHINGTON — Two U.S. senators, Brian Schatz (D-Hawaii) and John Thune (R-S.D.), have introduced the Platform Accountability and Consumer Transparency (PACT) Act, a new piece of legislation aiming to update Section 230 of the Communications Decency Act.

Schatz and Thune are, respectively, the Ranking Member and Chairman of the Senate Commerce Committee's Subcommittee on Communications, Technology, Innovation and the Internet.

According to a statement by Schatz’s office, “the PACT Act will strengthen transparency in the process online platforms use to moderate content and hold those companies accountable for content that violates their own policies or is illegal.”

Schatz explained that “Section 230 was created to help jumpstart the internet economy, while giving internet companies the responsibility to set and enforce reasonable rules on content. But today, it has become clear that some companies have not taken that responsibility seriously enough.”

“Our bill updates Section 230 by making platforms more accountable for their content moderation policies and providing more tools to protect consumers,” Schatz added.

Thune stated that “there is a bipartisan consensus that Section 230, which governs certain internet use, is ripe for reform.”

“There is also a bipartisan concern that social media platforms are often not transparent and accountable enough to consumers with respect to the platform’s moderation of user-generated content,” Thune added, making it explicit that for both senators the regulation of internet speech is a political issue.

Thune summarized some aspects of the PACT Act, saying that it will “require technology companies to have an acceptable use policy that reasonably informs users about the content that is allowed on platforms and provide notice to users that there is a process to dispute content moderation decisions.”

Thune sought to position the bill he is co-sponsoring as a middle ground between the status quo and the more extreme calls for government intervention on internet content.

“The internet has thrived because of the light touch approach by which it’s been governed in its relatively short history,” Thune stated. “By using that same approach when it comes to Section 230 reform, we can ensure platform users are protected, while also holding companies accountable.”

The PACT Act According to Its Sponsors

According to its sponsors, the Schatz-Thune PACT Act “creates more transparency” by:

- Requiring online platforms to explain their content moderation practices in an acceptable use policy that is easily accessible to consumers

- Implementing a quarterly reporting requirement for online platforms that includes disaggregated statistics on content that has been removed, demonetized, or deprioritized

- Promoting open collaboration and sharing of industry best practices and guidelines through a National Institute of Standards and Technology-led voluntary framework.

The PACT Act, the sponsors claim, will hold platforms accountable by:

- Requiring large online platforms to provide process protections to consumers by having a defined complaint system that processes reports and notifies users of moderation decisions within 14 days and allows consumers to appeal online platforms’ content moderation decisions within the relevant company

- Amending Section 230 to require large online platforms to remove court-determined illegal content and activity within 24 hours

- Allowing small online platforms to have more flexibility in responding to user complaints, removing illegal content, and acting on illegal activity, based on their size and capacity.

But the PACT Act also provides for new, extensive government intervention on online platforms. In the words of its sponsors, the PACT Act will expand government and court powers of intervention by:

- Exempting the enforcement of federal civil laws from Section 230 so that online platforms cannot use it as a defense when federal regulators, like the Department of Justice and Federal Trade Commission, pursue civil actions for online activity

- Allowing state attorneys general to enforce federal civil laws against online platforms that have the same substantive elements of the laws and regulations of that state

- Requiring the Government Accountability Office to study and report on the viability of an FTC-administered whistleblower program for employees or contractors of online platforms.

Troubling Definitions

The actual text of the PACT Act shared by Schatz’s office also includes some troubling definitions that might open the door to state censorship.

The PACT Act defines “illegal activity” for the purpose of content moderation as “activity conducted by an information content provider that has been determined by a Federal or State court to violate Federal criminal or civil law.”

It also defines “illegal content” for the purposes of moderation, prohibition or deletion as “information provided by an information content provider that has been determined by a Federal or State court to violate (a) Federal criminal or civil law; or (b) State defamation law.”

The PACT Act also requires platforms to “explain the means by which users can notify the provider of potentially policy-violating content, illegal content, or illegal activity,” mandating “a live company representative to take user complaints through a toll-free telephone number during regular business hours for not fewer than 8 hours per day and 5 days per week” and “an email address or relevant intake mechanism to handle user complaints.”

The PACT Act then requires internet service providers and platforms that have received “notice of illegal content or illegal activity on the interactive computer service” to “remove the content or stop the activity within 24 hours of receiving that notice, subject to reasonable exceptions based on concerns about the legitimacy of the notice.”

Although the rationale offered for these requirements, and for the broad expansion of ISP and platform liability, includes cases of “revenge porn,” the bill as Schatz and Thune have worded it opens the door to an elaborate system of censorship. That system would combine whatever individual courts consider “illegal” with case-by-case deletion demands, feeding a stream of complaints that platforms would have to devote potentially unlimited funds and labor hours to act on, under the threat of penalties.

The definition of “illegal content” cited above, which makes no allowance for any process of appeal or further judicial debate over the supposed “illegality” of particular material, also opens the door to state censorship of content by placing it in the hands of individual judges.

If the PACT Act passes as worded, it could create a de facto system of state censorship regulated by the "lowest common moral denominator" of any state judge.

Schatz, TechCrunch reported, cited “an appetite to legislate” as one reason the proposal is significant.

“Here’s why we think this bill is significant,” Schatz said. “First, because we believe it is the most serious effort to retain what works in 230, and try to fix what is broken about 230. Second, you have the chair and ranking member of the subcommittee introducing the bill, which is not a trivial matter. And third, because we do think there is an appetite to legislate here.”

"Though the volume gets turned up when someone wants to beat up on the platforms via cable TV or Twitter, the serious work of the Commerce Committee has always been bipartisan," Schatz concluded.

Industry Attorneys Weigh In

Adult industry attorney and First Amendment expert Larry Walters of the Walters Law Group called the PACT Act "probably the most palatable of the various proposals to reform Section 230."

The obligation to remove content found to be illegal by state or federal courts, Walters pointed out, "is already largely honored by the major online platforms. They also have written acceptable use policies and content moderation appeal procedures in place."

However, he continued, "allowing state and federal authorities to hold platforms liable for user-submitted content is a dangerous proposal, which could result in some platforms shutting down or never launching due to the increased legal risks. The policy reasons for granting immunity to platforms for user content are not minimized by the fact that a state or federal agency is bringing the claim."

Requiring a live company representative to take complaints by telephone, Walters said, seems "counter-productive in the Digital Age where most complaints must be accompanied by a specific link to be effective. The idea of a user reading off a hundred character link to a live customer service representative is laughable."

"If Congress is intent on amending Section 230, perhaps this bill could be tweaked so it will do minimal harm to online innovation and freedom. Fortunately, the proposal does not seek to end encryption, which has been a deal-killer in previous legislative efforts," Walters added.

Another noted industry attorney, Corey Silverstein, also flagged what he saw as "problematic language," adding that he sees "First Amendment issues all over" the proposed PACT Act.

Main image: John Thune (R-S.D.) and Brian Schatz (D-Hawaii)